The Vault Java SDK is a powerful tool in the Vault Platform, allowing developers to extend Vault and deliver custom capabilities and experiences to Veeva customers. It provides a completely new experience in developing industry cloud applications, leveraging industry standard tools to develop and debug and integrating seamlessly with Vault in the cloud.

Develop: Developers can code in Java and debug using their favorite IDE by simply attaching to a Vault in the cloud. There is no need to learn a proprietary programming language or use unfamiliar development tools.

Upload: Developers or Vault Admins can easily upload source code to Vault with a familiar tool used in configuration migration. Source code is stored in Vault as metadata which is easily migrated from Sandbox to Production Vaults.

Deploy: Once the source code is uploaded, it is compiled and loaded in real-time. Custom logic is applied immediately. Developers and Admins can use the Debug Log to monitor and troubleshoot issues in the deployed code, and both the Admin UI and Vault API to manage deployed code. Learn more about deploying code to Vault.

The 22R2 release added the Run Custom Code as Java SDK Service Account setting to Admin > Settings > General Settings. If the Run Custom Code as Java SDK Service Account setting is enabled in your Vault:

The Java SDK Service Account user appears in the Users & Groups Admin tab in all Vaults but does not affect license counts.

In 22R2, this setting was off by default, but Admins could enable it from the General Settings page.

Default behavior for this setting will change as follows in upcoming releases:

Developers can use the Vault Java SDK to extend Vault by implementing custom code, such as triggers and actions.

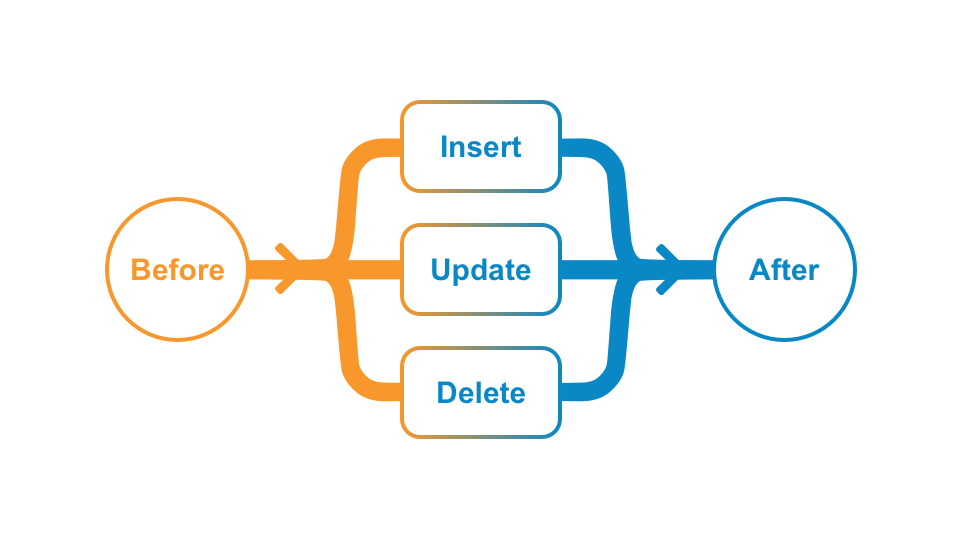

INSERT, UPDATE, or DELETE) occurs on an object record. Developers can place custom logic BEFORE or AFTER the operation. Learn more about record triggers.

Record Actions: Execute custom code when a user invokes a user action on a record. Learn more about record actions.

Document Actions: Execute custom code when a user invokes a user action or when a document enters a certain lifecycle state. Learn more about document actions.

To develop with the Vault Java SDK, you need all of the tools for Java development, such as a Java Development Kit (JDK) and an Integrated Development Environment (IDE). You will also need a Vault to test your new Vault extensions before deploying them to production.

To get more help getting started, you can post or find an answer in the Vault for Developers community on Veeva Connect.

In this step, you will:

As the first step in the Getting Started, you need to configure your Vault with the provided SDK record trigger. Besides the custom code for the record trigger itself, the sample trigger runs on the vSDK Hello World object, which you must also add to your Vault. Instead of manually configuring your Vault, you will deploy a prepackaged set of components called a Configuration Migration Package, or VPK (.vpk). This VPK is included in the downloaded vSDK Hello World project, and you can import this into Vault in just a few clicks.

In the future, you can also use this VPK process to copy the configuration of your production environment and replicate it in your sandbox Vault. You can learn more about Configuration Migration Packages (VPKs) in Vault Help.

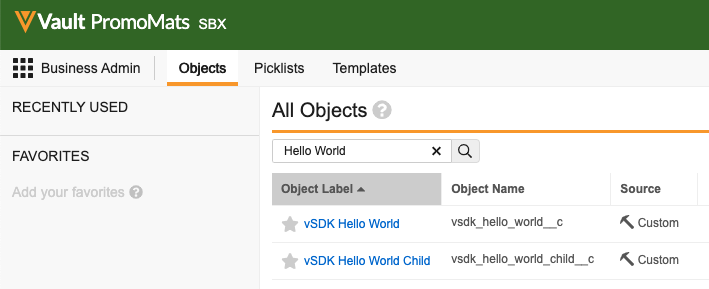

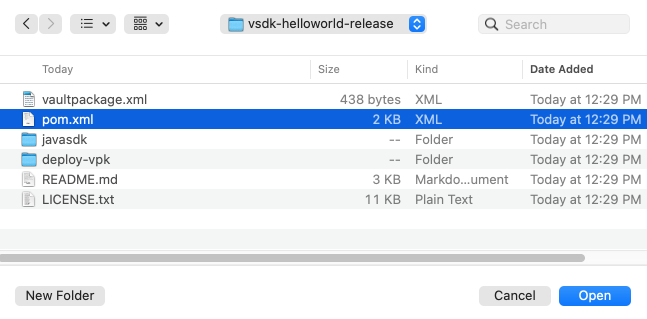

deploy-vpk/vsdk-helloworld-components.vpk file in your downloaded or cloned project folder. The import should take less than a minute to complete. Refresh the page to find the imported package.replace_all Vault Java SDK deployment option, which ensures your getting started environment will not have any conflicting configuration. You can learn more about replace_all and other Vault Java SDK deployment options in the SDK documentation. When the deployment is complete, you will receive a notification. Deployment should take less than 5 minutes.vsdk_hello_world__c) and vSDK Hello World Child (vsdk_hello_world_child__c) objects exist here, the VPK imported the Vault objects successfully!

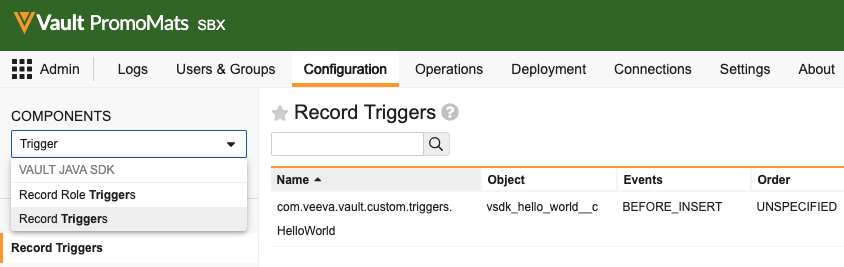

com.veeva.vault.custom.triggers.HelloWorld exists here. If the HelloWorld trigger exists, your Vault setup is complete! You can learn more about the information and options available on this page in Vault Help, such as how to download deployed code and how to turn deployed code on and off.

replace_all and other Vault Java SDK deployment options in the SDK documentationIn this step, you will:

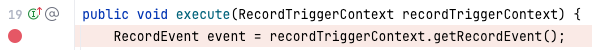

The HelloWorld record trigger executes at the BEFORE_INSERT event on the vsdk_hello_world__c object. This means the trigger will execute custom code right before an object record is saved, and that this trigger only executes on vsdk_hello_world__c object records. All other objects would not trigger this record trigger.

When triggered, the custom code written for the HelloWorld trigger will set a value in the Description field on the triggering Vault object record.

To execute the HelloWorld record trigger:

BEFORE_INSERT event, which triggers the HelloWorld trigger to add a value to the Description field before the record is saved. Once the record is saved, you should see the Description field was set to “Hello, {name}!”. That’s your record trigger in action!In this step, you will:

Now that you’ve set up your Vault and executed a record trigger in the Vault UI, you can move on to writing your own custom code. To do this, let’s start with setting up your development environment.

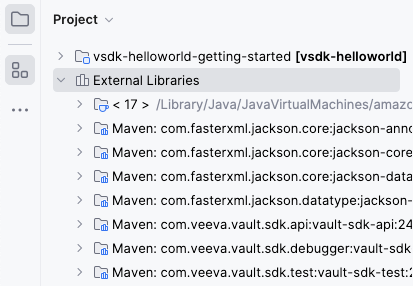

Maven: com.veeva.vault.sdk.api:vault-sdk-api library is present in the External Libraries section. If this file is not present or the External Libraries section is empty, make sure you have access to the Internet and your browser can load repo.veevavault.com. You should also make sure your POM file is set up with the correct Vault SDK version.

The Java SDK version in IntelliJ® must match the version you installed during the Prerequisites setup.

To set the Java SDK version in IntelliJ® to the required version:

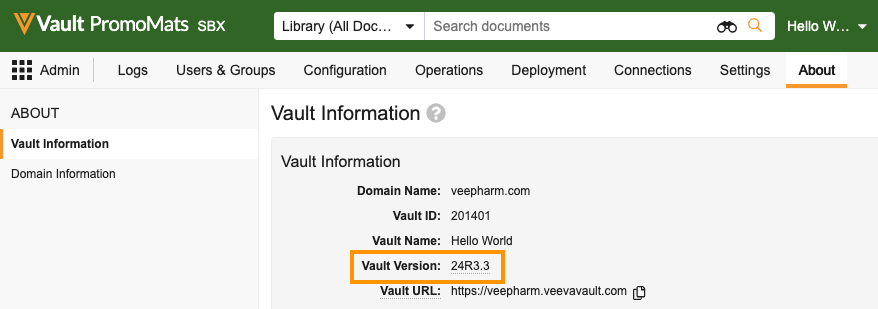

The Java SDK library version must match the Vault version. You can update the version by editing the <vault.sdk.version> attribute in your POM file.

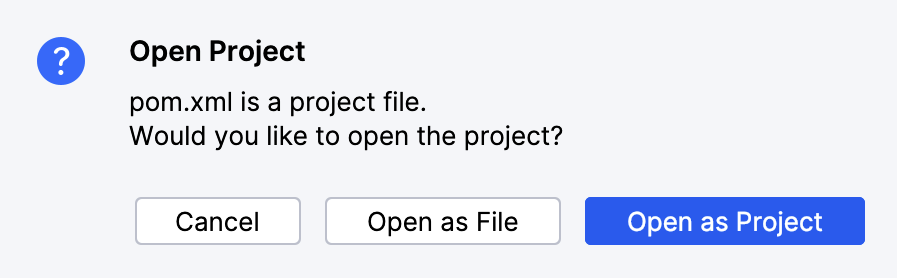

pom.xml file.Update the <vault.sdk.version> to your Vault version, using only periods (.) and not the letter R. For example, a Vault on version 24R3.3 should look like this:

<properties>

<vault.sdk.version>[24.3.3-release0, 24.3.3-release1000000]</vault.sdk.version>

</properties>

If prompted, select Import Changes. You can also Enable Auto-Import to instruct Maven to automatically import any future changes.  In newer versions of IntelliJ®, you may need to import changes manually by right-clicking the

In newer versions of IntelliJ®, you may need to import changes manually by right-clicking the pom.xml file and selecting Maven > Reload project.

In the External Libraries section of IntelliJ®, verify the Maven: com.veeva.vault.sdk.api:vault-sdk-api library shows your Vault version. If it does, your development setup is complete!

In this step, you will:

With the development environment set up, you can now modify the HelloWorld trigger in your IDE.

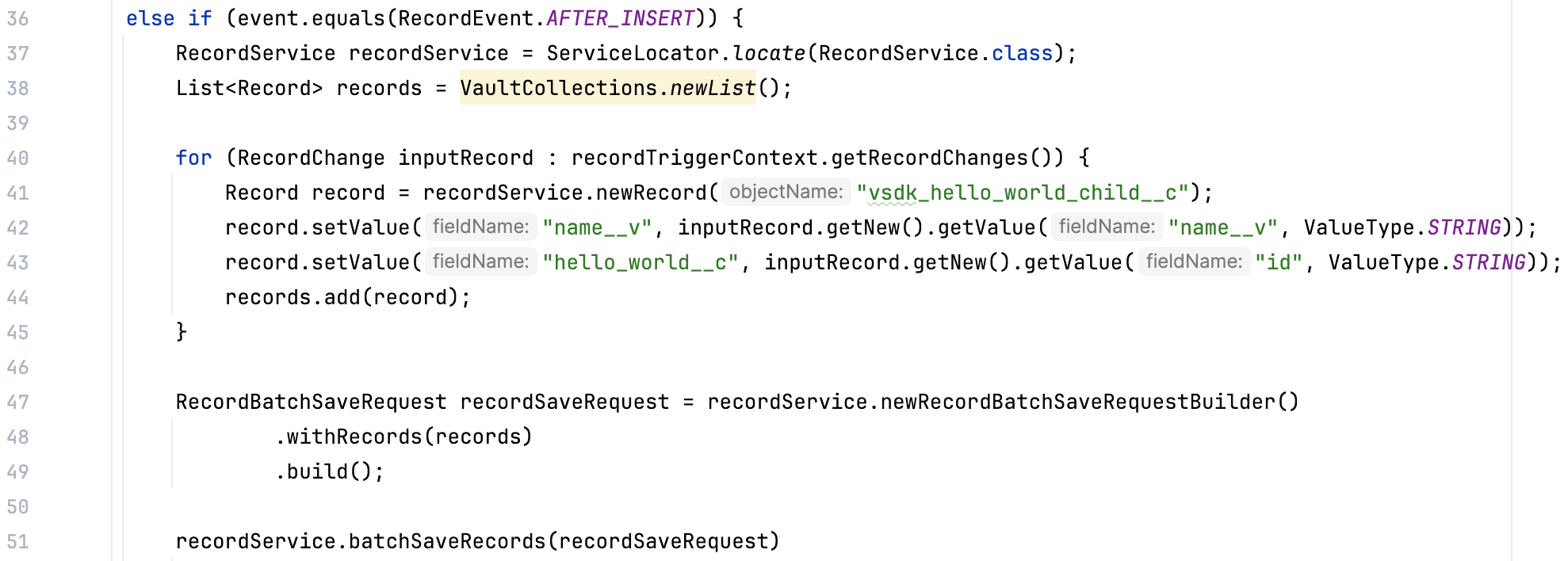

Right now, the HelloWorld trigger has custom logic on the BEFORE_INSERT event. In this step, you will add new custom code to trigger on the AFTER_INSERT event. We’ve already written most of this code for you, so you can focus on the structural updates needed to add code for a new event on an existing trigger.

Unlike the BEFORE_INSERT event which triggers right before the record saves, the AFTER_INSERT event triggers immediately after the record saves. When triggered, this new custom code creates a vsdk_hello_world_child__c record.

HelloWorld Java file, which is located in \src\main\java\com\veeva\vault\custom\triggers./*) on lines 35 and 74. This will uncomment lines 35 through 51, which is code for custom logic on the AFTER_INSERT event.

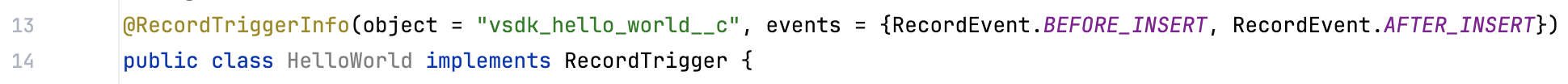

AFTER_INSERT code will run, you need to add this event to the record trigger annotation. You can do this by adding the event to the @RecordTriggerInfo annotation. In line 13, add RecordEvent.AFTER_INSERT to the event parameter in the annotation:

You’ve just written your first piece of SDK code, custom logic for an AFTER_INSERT event! In the next step, you will deploy these changes to your Vault.

@RecordTriggerInfo annotation and how this works together to build the anatomy of a record triggerBEFORE_INSERT orAFTER_INSERT eventIn this step, you will:

If you’re using IntelliJ®, Maven is already bundled with your installation. If you’re using another IDE, you may need to manually install Maven.

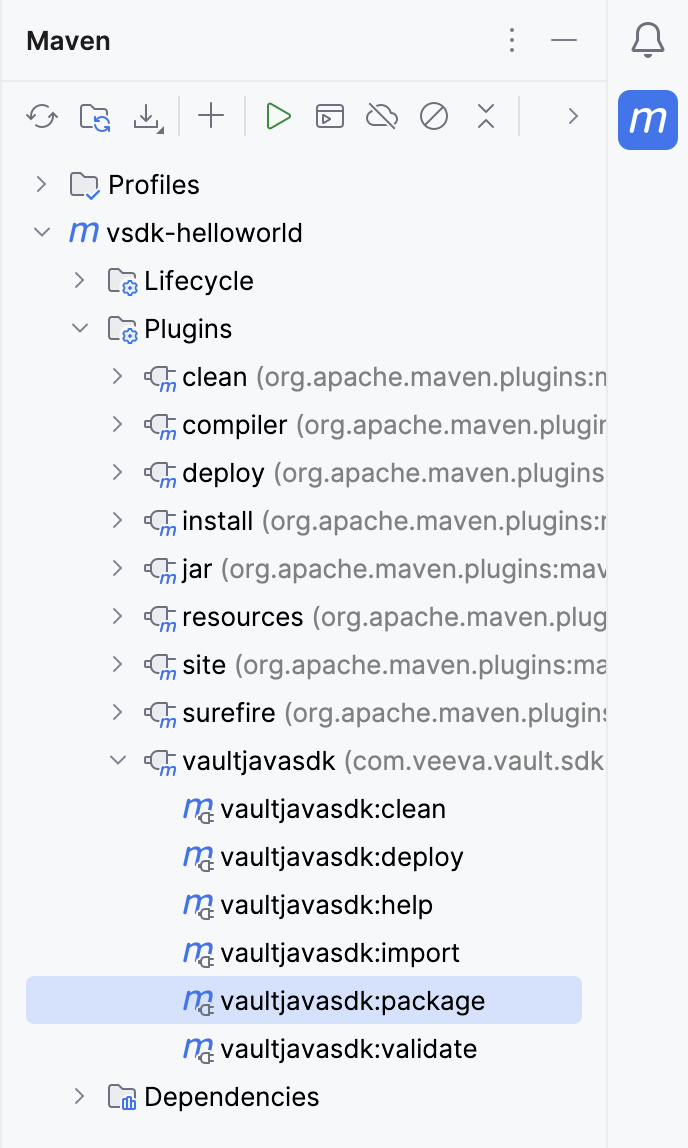

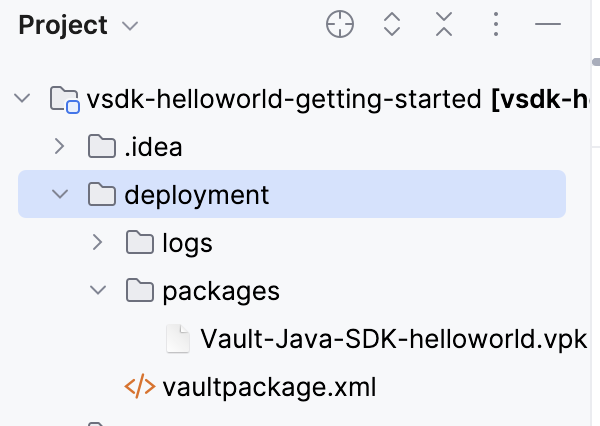

vsdk-helloworld-getting-started\src\main\resources and open plugin_settings_file.json.firstname.lastname@example.com with your Vault user name.vsdk-helloworld-getting-started\src\main\resources and open vapil_settings_file.json.vaultUsername, vaultPassword, and vaultDNS values with your login information.vaultjavasdk:package Maven goal. This creates the .vpk file which contains your code changes.

vaultpackage.xml file and a .vpk file.

vaultjavasdk:deploy Maven goal from the Maven sidebar menu to deploy the .vpk to Vault. Deploying may take a minute. You will receive an email when Vault completes the deployment.Now that we’ve deployed the new trigger code for the AFTER_INSERT event, let’s run it again and make sure that the changes work as expected.

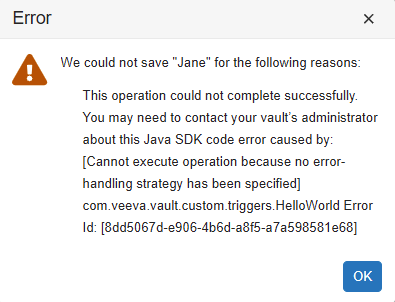

AFTER_INSERT event and we’re getting an error message!

When saving records using Vault Java SDK, you must specify an error handling strategy. Because the AFTER_INSERT event is saving the record, writing code for this event requires an error handling strategy. You can learn more about error-handling and how to fix this error in the next tutorial: Using the Vault Java SDK Debugger.

Congratulations, you’ve finished the Getting Started!

To recap what we’ve learned, you now know how to:

In this tutorial, you will:

This tutorial builds from the Getting Started: Hello World! tutorial. In this tutorial, you will use the Vault Java SDK Debugger to fix the error generated in the getting started.

If you only need a quick-reference to connect to the debugger, you can skip to Debugger Setup.

This tutorial builds from the Getting Started: Hello World! tutorial. In this tutorial, you will use the Vault Java SDK Debugger to fix the error generated in the getting started.

If you only need a quick-reference to connect to the debugger, you can skip to Debugger Setup.

In this tutorial, you will:

In the Getting Started, the deployed code produced an error:

To fix this error, you must add an error-handling strategy. While error handling strategies are recommended for all SDK code, error-handling is required when saving records in Vault Java SDK. The current code in the AFTER_INSERT event creates a new record, which triggers a record save.

Our error-handling strategy will cover the following:

.onSuccesses), write a message to the Debug or Runtime logs.onErrors), throw a RollbackException and roll back the transactionWe’ve already written this code for you in the Hello World project, so all you need to do is remove the code comments.

To add the error-handing strategy to our existing trigger:

In this step, you will:

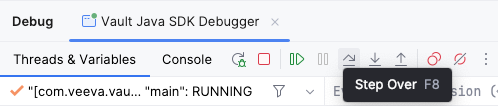

Before deploying the code changes, you may want to test it first through the Debugger. Once connected to your Vault, the Debugger allows you to run code locally on your machine without enabling the code for all users in your Vault. This allows you to test and troubleshoot code locally before deploying finished code.

You must have the standard Vault Owner security profile. Learn more in related permissions.

To set up the debugger:

Main Class: com.veeva.vault.sdk.debugger.SdkDebugger. The SDK Debugger Main Class should auto-complete as you begin to type. If it does not autocomplete, or if your IDE cannot recognize this Main Class, you may have completed a previous step incorrectly.

Program Arguments:

-h {host name}: The host name of your Vault. For example, myvault.veevavault.com. You should not include https://.-u {user name}: Your Vault user name, which you use to log in.-p {password}: Your Vault password.-s {sessionId}: You can choose to authenticate using other tools, such as a browser or PostmanTM, and provide an authenticated sessionId in this switch instead of providing -u and -p.-?: Display debugger help and exit.If your connection to the debugger is successful, you will see a console message stating Welcome to the Vault Java SDK Debugger and additional information such as your user, host, and debugger version.

You may encounter the following errors when running the debugger:

Your Vault Java SDK library version does not match the Vault version [XX.X.X]. Update your Java SDK library version to match the vault.: Update the <vault.sdk.version> in your pom.xml file to match the Vault version. If the error persists after verifying you’ve completed the steps correctly, you may need to reference our more extensive POM Setup section.Your debugger Java version does not match the vault Java version [17]: Set the IntelliJ® Java SDK version to 17.A Request Profiler session is already in progress for this vault: You must wait for the profiler session to finish before you can attach the debugger. Learn more about the SDK Request Profiler.Instead of running the code, you can place breakpoints and debug the Vault extension class line by line.

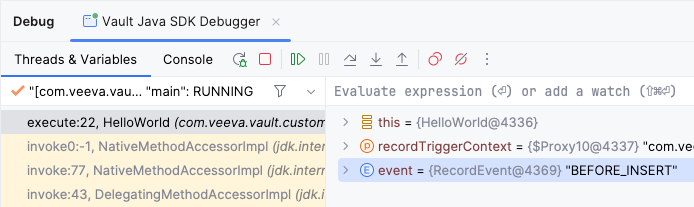

event variable is assigned a value of BEFORE_INSERT. The code will now step through the BEFORE_INSERT section of code logic.

event variable is now assigned a value of AFTER_INSERT. The code will now step through the AFTER_INSERT section of code logic.Now that you’ve debugged the code locally and confirmed that the trigger is working as expected, you need to deploy the code to Vault so that the custom code executes for all users. Then, you will run the code one more time in Vault to verify that the deployment succeeded and your code changes work as expected.

To deploy code to Vault with the Maven plugin:

vaultjavasdk:package Maven goal. The deployment folder will be updated with a new .vpk file with your code changes.vaultjavasdk:deploy Maven goal from the Maven sidebar menu to deploy the code to Vault. You will receive an email when Vault completes the deployment.To run our newly deployed trigger code in Vault:

Congratulations, you’ve completed the Vault Java SDK Debugger Tutorial!

To develop code, you need to have a Maven project. You need to make sure your POM file is set up correctly, and your src folder is under the javasdk folder.

The artifacts (.jars) for the Vault Java SDK are distributed by a Maven Repository Manager. This allows you to easily download the Vault Java SDK and all its dependent libraries by simply setting up a Maven project pointing to the Maven Repo Manager in the pom.xml file.

This file has three sections you may need to edit:

<properties><repositories><dependencies>The <vault.sdk.version> in your POM file must match the version of the Vault you are developing on. Note that when developing on limited release Vaults, the Vault Java SDK feature set is Beta and subject to change.

When Vault is upgraded to a new release or if you’re switching between Vaults during development, the <vault.sdk.version> element in the properties section must be updated accordingly to reimport the correct version of the Vault Java SDK from the repository.

You can find your Vault version in Admin > Settings > General Settings. You don’t need to worry about your Vault’s build number.

The <vault.sdk.version> must be in the following format:

[{vault_version}-release0, {vault_version}-release1000000]

{vault_version}: Your Vault version, using only periods (.) and not the letter R.For example, a Vault on version 18R3.0 should look like this:

<properties>

<vault.sdk.version>[18.3.0-release0, 18.3.0-release1000000]</vault.sdk.version>

</properties>

Your <repositories> section should look like this:

<repositories>

<repository>

<id>veevavault</id>

<url>https://repo.veevavault.com/maven</url>

<releases>

<enabled>true</enabled>

<updatePolicy>always</updatePolicy>

</releases>

</repository>

</repositories>

This dependency will pull the Vault Java SDK and all the libraries it depends on from the repository, and allows you to connect to the debugger.

Your <dependencies> section should look like this:

<dependencies>

<dependency>

<groupId>com.veeva.vault.sdk</groupId>

<artifactId>vault-sdk</artifactId>

<version>${vault.sdk.version}</version>

</dependency>

<dependency>

<groupId>com.veeva.vault.sdk.debugger</groupId>

<artifactId>vault-sdk-debugger</artifactId>

<version>${vault.sdk.version}</version>

</dependency>

</dependencies>

To use the QualityOne SDK, you must add an additional dependency:

<dependency>

<groupId>com.veeva.vault.app.qualityone.publicapi</groupId>

<artifactId>qualityone-publicapi</artifactId>

<version>${vault.sdk.version}</version>

</dependency>

Developing Vault Extensions means writing your own implementation of specific Vault extension interfaces, such as RecordTrigger or RecordAction. For example, a record trigger must implement the RecordTrigger interface and annotate the class with the @RecordTriggerInfo to provide deployment information.

The following is a skeleton code example of a trigger class implementation:

package com.veeva.vault.custom.triggers;

import com.veeva.vault.sdk.api.data.RecordTriggerInfo;

import com.veeva.vault.sdk.api.data.RecordTrigger;

import com.veeva.vault.sdk.api.data.RecordEvent;

import com.veeva.vault.sdk.api.data.RecordTriggerContext;

import com.veeva.vault.sdk.api.data.RecordChange;

@RecordTriggerInfo(object = "object_name__c", events = {RecordEvent.BEFORE_INSERT})

public class ObjectTrigger implements RecordTrigger {

public void execute(RecordTriggerContext recordTriggerContext) {

// process each input record.

for (RecordChange recordChange : recordTriggerContext.getRecordChanges()) {

}

}

}

Generally, a Vault extension’s implementation uses services provided by the Vault Java SDK. With these services, you can apply custom business logic such as retrieving and performing data operations according to business requirements.

Learn more about services.

While developing Vault extensions is essentially programming in Java, there are some language and JDK restrictions to ensure your code runs securely in Vault.

You should observe the following general guidelines when developing Vault extensions:

RollbackException for exception handlingRestrictions are checked during validation, which happens when you deploy code to Vault from a VPK. For example, if your code uses a third-party library or non-allowlisted class, it will not pass validation and deployment will fail. We recommend validating your code often during the development process to catch issues early.

You can do this with the Validate Package endpoint.

POST /api/{version}/services/package/actions/validate

To use this endpoint, you must create a Vault Package File (VPK) as input.

You can view, download, delete, enable or disable deployed extensions in the Admin UI, located in Admin > Configuration > VAULT JAVA SDK. Learn more about the Admin UI in Vault Help.

When testing Vault code locally through the debugger, the code is only active locally while the debugger is running. To make the code run for all users in your Vault, you must deploy it.

To deploy code, a Vault Admin must enable configuration packages in your Vault. Learn how to enable configuration packages in Vault Help.

Deploy code in three steps:

With this deploy method, you can accomplish any of the following:

If you need other deploy options, such as deploying or deleting a single file in the target Vault, see Managing Deployed Code. However, deploying a single file rather than a VPK is considered bad practice and should be used sparingly.

Note that your Vault user must have the correct permissions to deploy code. See the related permissions table for more information.

You can also create, validate, import, and deploy a VPK using the Vault Java SDK Maven Plugin.

Before you can create your VPK, you must verify that your source code is in the proper file structure and prepare a valid vaultpackage.xml manifest file. This manifest file tells Vault whether you’re adding, replacing, or removing code.

Create a VPK by zipping your javasdk folder and the vaultpackage.xml manifest file and renaming it with the .vpk extension. For more detailed instructions, see Getting Started.

Your file structure must adhere to the following guidelines:

javasdk folder in a maven project directory hierarchy as follows: src.main.java.com.veeva.vault.customcustom. For example, custom.triggers or custom.actions..java source files or 50MB of data in a single deploy. If you have more than 1,000 source files or a VPK larger than 50MB, the entire deploy will fail.Your manifest file must be named vaultpackage.xml and must be located in the root of your file structure.

Example vaultpackage.xml:

<vaultpackage xmlns="https://veevavault.com/">

<name>PKG-DEPLOY</name>

<source>

<vault></vault>

<author>mmurray@veepharm.com</author>

</source>

<summary>PromoMats RecordTrigger</summary>

<description>Record trigger on the Product object for PromoMats.</description>

<javasdk>

<deployment_option>incremental</deployment_option>

</javasdk>

</vaultpackage>

All of the following attributes must appear in the manifest file. Attributes marked as Optional must still be included, but can be left with a blank value.

| Attribute | Description |

|---|---|

<vaultpackage> | Top-level attribute to hold all other attributes. Must include xmlns="https://veevavault.com/". |

<name> | A name which identifies this package. |

<source> | A top-level attribute to hold the following sub-attributes:

|

<summary> | Provide more information about this package. Appears in the Summary section of Admin > Deployment > Inbound Packages. |

<javasdk> | A top-level attribute to hold the <deployment_option> sub-attribute. This tells Vault how to deploy your package in Vaut. Valid values are:

|

<description> | Optional: A description of your package. If omitted, the description will appear blank in Admin > Deployment > Inbound Packages. |

incremental: Add new or update existing code in the target Vault. This will never delete source files from the target Vault, but it may overwrite existing files.replace_all: Completely delete all existing source code in the target Vault and replace it with the code in this VPK. This may permanently delete source files from the target Vault.delete_all: Delete all of the existing source files in the target Vault. This setting only works if the VPK contains an empty javasdk folder, or if no such folder is included.After creating the VPK, you need to import this VPK to your Vault. This does not deploy the code, it just adds the VPK containing the code to your Vault. The following instructions import the VPK using Vault API, but you can also import through the Vault UI.

With the Import Package endpoint, import your code.

PUT /api/{version}/services/package

The body of your request must include the VPK created in the previous step.

On SUCCESS, the response contains an id for the vaultPackage. You will need this ID to deploy the package through the API.

After importing your VPK, you need to deploy it. This is the final step which makes your Vault extension run for all users. The following instructions deploy the VPK using Vault API, but you can also deploy through the Vault UI. We recommend using the UI, which has a multi-step wizard that ensures validation.

Deploy your package with the Deploy Package endpoint.

POST /api/{version}/vobject/vault_package__v/{package_id}/actions/deploy

You can find the package_id URI Path Parameter in the API response from your import request. If you lost this ID, you can also find it in Admin > Deployment > Inbound Packages.

When you run the deploy endpoint, Vault first validates the VPK. If you have any validation errors, such as using non-allowlisted classes, deployment will fail. To avoid this, we recommend validating your package frequently throughout the development process.

After successful deployment, you can view deployed extensions in the Admin UI, located in Admin > Configuration > VAULT JAVA SDK. Learn more about the Admin UI in Vault Help.

If the deployment encounters any errors, Vault stops the deployment but does not roll back any changes it already made. We recommend downloading and checking the log file for details. Learn more about deployment errors in Vault Help.

Deploying VPKs are not the only way to manage your custom code. You can view, download, delete, enable or disable deployed extensions in the Admin UI, located in Admin > Configuration > VAULT JAVA SDK. Learn more about the Admin UI in Vault Help.

You also may need more granular deploy options. For example, you may need to delete a single file rather than all files. However, we do not recommend using the following single-file deploy methods as you may introduce or delete code which breaks existing deployed code. As a best practice, you should always use VPKs to manage code deployment.

When deployed, extensions are automatically enabled. You may wish to disable an extension if you are troubleshooting a bug, or loading data into a Vault and do not want a trigger to execute. You can easily enable and disable extensions through the Admin UI, or you can use Vault API. Users must have the Admin: Configuration: Vault Java SDK: Create and Edit and permissions to enable or disable code.

PUT /api/{version}/code/{FQCN}/{enable || disable}

You can only enable and disable entry-point classes, such as triggers and actions. You cannot disable UDCs, or Vault extensions which reference other code components.

You can retrieve the source code for a single file through the Admin UI, or through Vault API. Users must have the Admin: Configuration: Vault Java SDK: Read permission to download source code.

GET /api/{version}/code/{FQCN}

You may need to add or replace a single file rather than a whole VPK. However, we do not recommend using the following single-file deploy method as you may introduce or delete code which breaks existing deployed code. As a best practice, you should always use VPKs to manage code deployment.

The following endpoint adds or replaces a single .java file in the currently authenticated Vault. If the given file does not already exist in the Vault, it is added. If the file does already exist, the file is updated.

PUT /api/{version}/code

Users must have the Admin: Configuration: Vault Java SDK: Create and Edit and permissions to use this endpoint.

In some cases, you may need to delete a single file rather than replace all or delete all files. However, we do not recommend using the following single-file deploy method as you may introduce or delete code which breaks existing deployed code. As a best practice, you should always use VPKs to manage code deployment.

Code deletion is permanent. There is no way to retrieve a deleted code file. Vault does not allow deletion of a file which is currently in-use.

You can delete a single source file through the Admin UI, or through Vault API.

DELETE /api/{version}/code

Users must have the Admin: Configuration: Vault Java SDK: Create and Edit and permission to delete code with this endpoint.

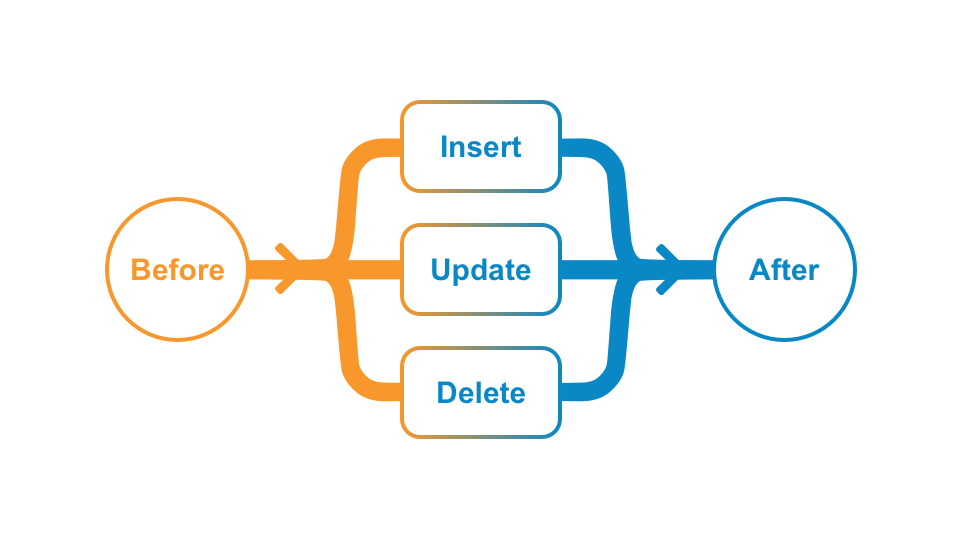

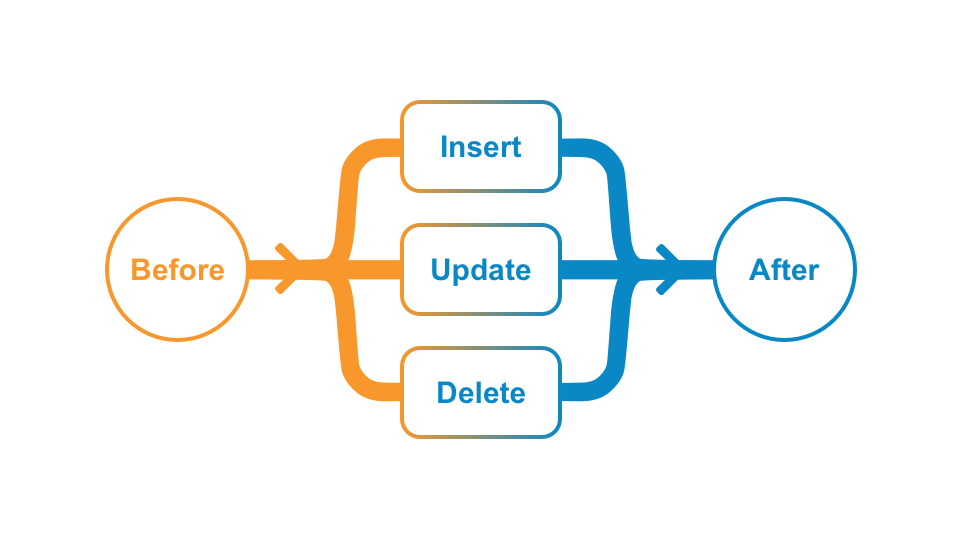

A record trigger executes custom business logic whenever a data operation makes changes to an object record. Users manipulate data in Veeva applications by using the UI or API to Insert, Update, and Delete records. When these operations occur, the Vault Java SDK provides interfaces to interact with the record data before and after the data operations. Using the Java SDK, users can apply custom business logic in Event handlers for BEFORE and AFTER Events.

This Event-driven programming model allows developers to write small programs that target a specific object and Event to address common business requirements which standard application configurations cannot address.

The following are some typical uses for triggers by Event type:

Field Value Defaults: Default field values before creating a record.

Field Value Validations: Validate field values before saving or deleting a record.

Conditionally Required Fields: Make a field required by canceling the save operation if some condition is not met.

Create, Update, or Delete Related Records: Create, update, or delete other records after saving or deleting a record.

Start Workflow: Start a workflow after creating or updating a record.

Change State: Change the lifecycle state of a record.

Let’s examine a typical Save new record operation initiated by a user and walk through what a trigger does. When the user clicks the Save button, the system captures the object, such as product__v, and Event, such as BEFORE_INSERT, which looks up a registry for triggers on the given object and Event and executes them in order. The system passes the data entered by the user to the trigger during trigger execution.

BEFORE_INSERT trigger logic can interact with the current record to:

QueryService to find related records by executing Vault Query Service (VQL) to return a set of related records.After saving the record, the system executes the AFTER_INSERT triggers for the same object.

AFTER_INSERT trigger logic can interact with the current record to:

RecordService to create related/child records (Create Related Records).JobService to start a workflow on the newly created record (Start Workflow).JobService to change the state of the current record (Change State).Since the current record cannot change in the AFTER_INSERT Event, most of the business logic in this Event interacts with other records through RecordService or performs jobs on the current record that are executed asynchronously in a separate process. Learn more about the order of operations for object field defaults in Vault Help.

When processing a request, the System performs the following sequence of steps:

BEFORE Action Triggers.BEFORE Record Triggers.AFTER Action Triggers.AFTER Record Triggers.The data available in BEFORE and AFTER Event triggers depends on the operations (INSERT, UPDATE, and DELETE). For example, in an INSERT operation, you cannot get old or existing values because a new record is being inserted. Similarly, setting a field value only makes sense in the BEFORE Event in INSERT and UPDATE operations. It doesn’t make sense to set field value after it has been persisted or in a DELETE operation. The following chart illustrates when you can get or set field values.

| Event | Record returned by getNew() | Record returned by getOld() | ||

|

| getValue | setValue | getValue | setValue |

| BEFORE_INSERT | X | X | ||

| AFTER_INSERT | X | |||

| BEFORE_UPDATE | X | X | X | |

| AFTER_UPDATE | X | X | ||

| BEFORE_DELETE | X | |||

| AFTER_DELETE | X | |||

As illustrated above, BEFORE triggers can change field values, but these values are not persisted to the database and not updated in the VQL index yet. In this case, using the QueryService to retrieve a record being modified by a trigger will only return the old (existing) values. In order to get the values set by a trigger inside a transaction, you must use the RecordService#readRecord method. However, this method generally uses more memory. It is only recommended when you need to get field values modified by multiple triggers in a single transaction. Otherwise, we recommend QueryService to retrieve record data.

Because AFTER triggers happen after database updates and VQL indexing, you can use QueryService to retrieve both old and new values.

Lookup Field and Document Reference Field (latest version) are special field types. These field types have values set by the System.

In general, the System populates field values after the BEFORE Event. Because these field values are set by the System, the changes are not reflected in the BEFORE Event. For example, getNew() and getOld() will return the same existing value or null accordingly. However, the AFTER Event will return the new value set by the System in getNew() and the existing value in getOld().

In addition, because System-initiated requests do not fire triggers, triggers will not fire when the System updates a System-populated field.

If your trigger updates a document reference field, you must set the Document Version Reference to Specific Version. Learn more in Vault Help.

You can implement record triggers as normal Java classes. You can express complex business logic within a trigger class.

The code sample below explains the anatomy of a typical, basic trigger class. This example simply defaults a field value based on another field when creating a new record.

The explanations and line numbers below refer to the code sample above.

A custom record trigger must be under the com.veeva.vault.custom package. You can have further sub-package names as you see fit to organize your triggers. For example, you might use com.veeva.vault.custom.rim.submissions.triggers to indicate custom triggers for a RIM Submissions project.

Only references to Vault Java SDK (com.veeva.vault.sdk.api.*) and a limited number of allowlisted classes, interfaces, and methods in the JDK are allowed. For example, String, LocalDate, List, etc.

The class annotation (@RecordTriggerInfo) indicates that this class is a record trigger. The annotation specifies the Object, Event(s), and Order of execution.

product__v. This specifies the object that the trigger code will execute on.{RecordEvent.BEFORE_INSERT, RecordEvent.BEFORE_UPDATE}. This specifies the Event(s) that the trigger code will execute on.The class name declaration must include the public modifier and implements RecordTrigger. As a best practice, class name should indicate the object affected by this trigger and some functional description, for example, ProductFieldDefaults implements RecordTrigger means a trigger on Product that defaults some field values.

You must implement this method for the RecordTrigger interface. This method has an instance of RecordTriggerContext passed in, so you can interact with the record(s) on which a user has initiated some operation.

When a user performs a record operation whether by UI or API, such as creating a new record, the record being created is the context record. The operation may have multiple context records such as in a Bulk Create.

A list of records affected by the operation can be retrieved from RecordTriggerContext#getRecordChanges, and you can loop through each record to get field values and/or set field values. Your business logic is enclosed in this loop.

You can retrieve field values from the context record. Trigger code operates as the System user, so no record level or field level security apply. All records and fields are accessible.

For new records, only new values are available. For updating records, both old and new values are available. The fieldName argument must be a valid field name in the object. For example, name__v. The fieldType argument must match the Vault field type in order to return the appropriate Java data type. Use the Data Type Map to find out how data types are mapped to objects in Vault.

You can set field value on fields that are editable. System fields, such as created_by__v and state__v, and Lookup fields are not editable.

The fieldName argument must be a valid field name in the object. The fieldValue argument must be an object of the appropriate data type for the field in the fieldName argument. Use the Data Type Map to find out how data types are mapped to objects in Vault.

A record role trigger executes custom business logic whenever roles are directly (manually) added or removed from an object record.

Users can manage manual role assignment in the UI with object sharing settings or from a workflow using the add or remove role system step, through Vault API, or using the Vault Java SDK RecordRoleService. Each of these methods can activate an SDK record role trigger.

When these operations occur, the Vault Java SDK provides interfaces to interact with the record data, and the record role change before and after the record role change. Using the Java SDK, users can apply custom business logic in event handlers for BEFORE and AFTER Events.

This event-driven programming model allows developers to write small programs that target a specific object and Event to address common business requirements that standard application configurations cannot address.

The following examples illustrate typical uses for record role triggers by Event type:

It is common practice to enforce validation rules on role assignment. The BEFORE Event allows the code to execute validation logic before the role assignments (or un-assignments) apply.

You can implement record role triggers as normal Java classes. You can express complex business logic within a trigger class.

The code sample below explains the anatomy of a typical, basic trigger class.

package com.veeva.vault.custom.triggers.case3;

A custom record role trigger must be under the com.veeva.vault.custom package. You can have further sub-package names as you see fit to organize your triggers.

import com.veeva.vault.sdk.api.core.RollbackException;

Only references to Vault Java SDK (com.veeva.vault.sdk.api.*) and a limited number of allowlisted classes, interfaces, and methods in the JDK are allowed. For example, String, LocalDate, List, etc.

@RecordRoleTriggerInfo(object="product__v", events={RecordRoleEvent.BEFORE, RecordRoleEvent.AFTER},

order=TriggerOrder.NUMBER_2)

The class annotation (@RecordRoleTriggerInfo) indicates that this class is a record trigger. The annotation specifies the Object, Event(s), and order of execution.

product__v. This specifies the object that the trigger code will execute on.{RecordRoleEvent.BEFORE, RecordRoleEvent.AFTER}. This specifies the Event(s) that the trigger code will execute on.public class CheckProductRole implements RecordRoleTrigger {

The class name declaration must include the public modifier and implements RecordRoleTrigger. As a best practice, class name should indicate the object affected by this trigger and some functional description, for example, CheckProductRole implements RecordRoleTrigger means a trigger on a Product that does validation on Role assignment.

public void execute(RecordRoleTriggerContext rroletc) {

String ROLE_TO_READ = "owner__v";

You must implement this method for the RecordRoleTrigger interface. This method has an instance of RecordRoleTriggerContext passed in, so you can interact with the role record(s) on which a user has initiated some operation.

// Get Record Role changes

RecordRoleEvent event = rroletc.getRecordRoleEvent();

Get the record role Event from the RecordRoleTriggerContext. This trigger executes on both BEFORE and AFTER events, checking the context allows the code to check in which Event the code is executed.

List<RecordRoleChange> rrchanges = rroletc.getRecordRoleChanges();

The RecordRoleChanges() method return a list of RecordRoleChange. A recordRoleChange captures all changes done on the record role:

// Get RecordRoleChange details

RecordRole rrole = rrchange.getRecordRole();

A recordRoleChange provides a method getRecordRole() returning the current recordRole that is being changed. In a BEFORE Event, the recordRole exposes the role assigned before the change. In an AFTER Event, the recordRole exposes the role assignments after the change.

The Vaut Java SDK supports record triggers on the user__sys object. Users triggers work the same way as other record triggers, with some exceptions.

Most record triggers execute whenever a data operation makes changes to an object record. Unlike most record triggers, user triggers execute only when changes are made directly to the user__sys object record in a Vault. In other words, user triggers are not invoked by indirect updates.

For example, triggers on the user__sys object are not invoked in the following scenarios:

Custom code in Vault executes as the Java SDK Service Account, which has Vault Owner level access. Vault extension code, such as triggers and actions, can access object records with full read/write permission. This means any Vault user level, record level, or field level access restrictions do not apply. Custom code can copy or move data from object to object and delete data without regards to who the user is. It’s the developer’s responsibility to take that current user context into consideration and apply control where appropriate.

Data security should be considered when designing solutions using the Vault Java SDK.

When a user initiates a request (INSERT, UPDATE, or DELETE) such as clicking Save in UI or sending a POST via Object API, the system processes the request by firing the BEFORE Event triggers first, then committing data to the database, and then firing the AFTER Event triggers.

BEFORE triggers are often used for defaulting field values and validating data entry, whereas AFTER Event triggers are mostly used to automate creating other records or starting workflow processes.

When a user initiates a record role request, the system processes the request by firing the BEFORE Event triggers first, then committing data to the database, and then firing the AFTER Event triggers. BEFORE triggers are often used for validating data entry, whereas AFTER Event triggers are mostly used to automate the assignment or unassignment of roles on related records or documents.

A limit of 10 triggers are allowed in each Event and the order of execution can be specified. That means BEFORE and AFTER Events each have their own limit of 10 triggers allowed. In addition, when any given trigger executes, it can cause other triggers (nested triggers) to fire when it either performs a role a assignment or a data operation (INSERT, UPDATE, DELETE) programmatically. The nested trigger depth cannot exceed 10 levels deep.

To summarize, when a user initiates a request (for example, INSERT), the BEFORE Event triggers (up to 10) will execute in order. If any of the triggers cause other triggers to fire, the nested triggers will execute (up to 10 nested levels). After the system finishes the BEFORE triggers, the data with any changes made by the executed triggers persists, and the AFTER Event triggers will fire in the same manner with trigger order and nested depth. The image below illustrates this execution flow.

If you need to share data between different triggers or actions in the same transaction, you can do so with RequestContext.

Generally, triggers fire when a user initiates a request. When the System updates records, such as Lookup Field updates, triggers do not fire. Similarly, when the System performs a Hierarchical Copy (deep copy), the insert operation will not fire any triggers.

The trigger execution flow described above represents a transaction. In some cases, it is necessary to cancel the entire INSERT request and rollback any changes. Developers can throw a RollbackException in any trigger in the transaction, and execution will terminate immediately and roll back all changes.

Note that calling RecordChange#setError or RecordRoleChange#setError will not terminate a transaction. Instead, the trigger which caused the error will fail and the rest of the transaction will continue. In order to terminate an entire transaction, you should always throw a RollbackException.

The system will also terminate execution and rollback a request when errors occur, such as missing required field value on INSERT or exceeding allowed elapsed time limit (100 seconds).

Calls to asynchronous services such as JobService or NotificationService will execute only when the request transaction completes. This way, you can use a RollbackException to stop the transaction if necessary, preventing asynchronous services from executing unintentionally when rolling back a transaction. For example, if a DELETE Event trigger calls NotificationService to send a notification, but a nested trigger later rolls back the transaction, the system should not delete the record nor send the notification. This prevents the asynchronous notification process from executing erroneously. Once the entire transaction completes successfully, all queued asynchronous services execute immediately.

Triggers should be designed to process records in bulk, especially when making service calls, such as QueryService, RecordService and RecordRoleService. These services are designed to take a list of records or record role changes as input for CRUD operations. It is much more efficient to build a list of record for input and make a single call to these services rather than make service calls one record at a time inside a loop.

Triggers that do not process records in bulk will perform poorly, especially when there are multiple triggers (including nested triggers), execution will likely exceed the maximum elapsed time (100s) or CPU time (10s) allowed. In addition, queries that return large number of records with large number of fields (including fields not used in your code) will likely exceed the maximum memory allowed (40MB).

Generally, you should never run a query or perform CRUD operations on records in a loop. Each iteration will make unnecessary service calls which can be easily batched to get the same result with a single service call.

The following poorly performing code executes a query inside a “for” loop, for each Product record in a request. That means when a request has multiple records, like from an API call or bulk update wizard, the QueryService#query call is made for each of the records. The only difference between each query is the WHERE clause contains a different Country reference field value. Performing multiple queries in this case is inefficient and time consuming. A better approach is to make a single query with a CONTAINS clause for each Country referenced by the Product records in the request.

To make performance even worse, as each query is executed to retrieve related records, a forEach loop is used to call RecordService.batchSaveRecords to save each new Country Brand record one at a time. Creating, updating, and deleting records are the most expensive and time-consuming operations. You should always batch records up in a list as input when calling batchSaveRecords.

While the better performing code requires more lines of code as illustrated below, it performs much better because it reduces data operations significantly by leveraging the Vault Java SDK’s interfaces to process records in bulk.

@RecordTriggerInfo(object = "product__v", events = RecordEvent.AFTER_INSERT)

public class ProductCreateRelatedCountryBrand implements RecordTrigger {

public void execute(RecordTriggerContext recordTriggerContext) {

for (RecordChange inputRecord : recordTriggerContext.getRecordChanges()) {

QueryService queryService = ServiceLocator.locate(QueryService.class);

String queryCountry = "select id, name__v from country__v where region__c=" + "'" + region + "'";

QueryResponse queryResponse = queryService.query(queryCountry);

queryResponse.streamResults().forEach(queryResult -> {

Record r = recordService.newRecord("country_brand__c");

r.setValue("name__v", internalName + " (" + queryResult.getValue("name__v", ValueType.STRING) + ")");

r.setValue("country__c",queryResult.getValue("id",ValueType.STRING));

r.setValue("product__c",productId);

RecordService recordService = ServiceLocator.locate(RecordService.class);

recordService.batchSaveRecords(VaultCollections.asList(r)).rollbackOnErrors().execute();

});

}

}

@RecordTriggerInfo(object = "product__v", name= "product_create_related_country_brand__c", events = RecordEvent.AFTER_INSERT)

public class ProductCreateRelatedCountryBrand implements RecordTrigger {

public void execute(RecordTriggerContext recordTriggerContext) {

// Get an instance of the Record service

RecordService recordService = ServiceLocator.locate(RecordService.class);

List<Record> recordList = VaultCollections.newList();

// Retrieve Regions from all Product input records

Set<String> regions = VaultCollections.newSet();

recordTriggerContext.getRecordChanges().stream().forEach(recordChange -> {

String regionId = recordChange.getNew().getValue("region__c", ValueType.STRING);

regions.add("'" + regionId + "'");

});

String regionsToQuery = String.join (",",regions);

// Query Country object to select countries for regions referenced by all Product input records

QueryService queryService = ServiceLocator.locate(QueryService.class);

String queryCountry = "select id, name__v, region__c " +

"from country__v where region__c contains (" + regionsToQuery + ")";

QueryResponse queryResponse = queryService.query(queryCountry);

// Build a Map of Regions (key) and Countries (value) from the query result

Map<String, List<QueryResult>> countriesInRegionMap = VaultCollections.newMap();

queryResponse.streamResults().forEach(queryResult -> {

String region = queryResult.getValue("region__c",ValueType.STRING);

if (countriesInRegionMap.containsKey(region)) {

List<QueryResult> countries = countriesInRegionMap.get(region);

countries.add(queryResult);

countriesInRegionMap.put(region,countries);

} else

countriesInRegionMap.putIfAbsent(region,VaultCollections.asList(queryResult));

});

// Go through each Product record, look up countries for the region assigned to the Product,

// and create new Country Brand records for each country.

for (RecordChange inputRecord : recordTriggerContext.getRecordChanges()) {

String regionId = inputRecord.getNew().getValue("region__c", ValueType.STRING);

String internalName = inputRecord.getNew().getValue("internal_name__c", ValueType.STRING);

String productId = inputRecord.getNew().getValue("id", ValueType.STRING);

Iterator<QueryResult> countries = countriesInRegionMap.get(regionId).iterator();

while (countries.hasNext()){

QueryResult country =countries.next();

Record r = recordService.newRecord("country_brand__c");

r.setValue("name__v", internalName + " (" + country.getValue("name__v", ValueType.STRING) + ")");

r.setValue("country__c", country.getValue("id", ValueType.STRING));

r.setValue("product__c", productId);

recordList.add(r);

}

}

// Save the new Country Brand records in bulk. Rollback the entire transaction when encountering errors.

recordService.batchSaveRecords(recordList).rollbackOnErrors().execute();

}

}

Through the Vault Java SDK, you can create custom actions. These actions execute through the UI or API when invoked by a user. Unlike triggers, uploading action code does not make it execute. Action code requires an additional step from developers or Vault Admins to configure an action in Vault to use the uploaded code. Learn more about custom actions in the Javadocs.

Custom actions for records, called record actions, are invoked by a user on one or more specific records from the UI or API. You can configure custom record actions as any of the following:

User Action: Custom actions for records, called record actions or record user actions, are invoked by a user on a specific record from the UI or API. Learn more about Object User Actions in Vault Help. Note that if your object has types, you may wish to add the action to these object types. Learn more about assigning user actions to object types in Vault Help.

User Bulk Action: Bulk actions allow users to make changes to up to 1,000 object records at once from the Vault UI. Bulk actions cannot be annotated as any other type of action. If a user updates more than 500 records with a custom bulk action, Vault splits the update into two separate SDK transactions: one containing the first 500 records and a second containing the remainder. If one transaction throws a RollbackException, it does not rollback the other transaction. Learn more about bulk object record actions in Vault Help.

Lifecycle User Action: Actions invoked by a user on a specific object lifecycle state, from the UI or API. You can configure these actions in the Object Lifecycle configuration. Learn more about Object Lifecycle User Actions in Vault Help.

Entry Action: Actions automatically invoked when an object record enters a particular lifecycle state. You can configure these actions in the Object Lifecycle configuration. Learn more about Object Record Entry Actions in Vault Help.

Event Action: Actions automatically invoked when a user creates an object record. You can configure these actions in the Object Lifecycle configuration. Learn more about Object Record Event Actions in Vault Help.

System Action Step: Actions automatically invoked when an object record enters a particular workflow step. You can configure these actions during workflow configuration. Learn more about configuring system steps on object workflows in Vault Help.

Workflow Cancellation: Actions automatically invoked when a workflow is cancelled. You can configure these actions during workflow configuration. Learn more about cancellation actions in Vault Help.

In order to implement a custom action, the RecordAction interface requires implementing the following two methods:

isExecutable(): Optional: Return true to make the configured action visible and executable, or false to make the configured action hidden and unexecutable. This method is often used to check the current record field values, Admin configured parameter values, and user’s group/role membership in order to return true or false. Defaults to true if the method is not explicitly implemented.execute(): Add your action logic in this method. Here you can make updates to the current record, create new records, or use any of the Vault Java SDK services to interact with other records or documents. As a best practice, you should create a user-defined service containing action logic, which you can then invoke in one or more record actions.The @RecordActionInfo class annotation is also required to indicate this class is an action.

label: Label of this action. This is the label which appears for Vault Admins during action configuration, and is different from the label provided to end-users who run this action.object: If specified, the action is available for the specified object only. If omitted, the action is available across all objects.usages: If specified, the action is available for configuring in the specified usages only. For example, USER_ACTION. If omitted, defaults to UNSPECIFIED which means the action is available everywhere actions are supported with the exception of bulk actions. If Vault adds a new record action usage in future releases, code with UNSPECIFIED usage immediately becomes available for the new usage.user_input_object_type: If your action takes a user input object, you may want to identify the object type using the object type name, for example, base__v. If omitted, it means this action does not require a type for user input. Actions which require user input may not require a type.user_input_object: If your action takes a user input, object identify the object by its name, for example, product__v. If omitted, it means this action does not require user input.icon: The icon which appears in the Vault UI Action Bar for this action. Learn more about action icons.If your action updates a document reference field, you must set the Document Version Reference to Specific Version. Learn more in Vault Help.

Optionally, a dialog can be shown in the UI before and after the action execution for a class that implements the RecordAction interface.

onPreExecute: This method allows a confirmation dialog to be displayed to the user prior to the execution of a record action. A custom dialog title and message can be added.

onPostExecute: This method allows a post record action execution banner to be displayed to the user. A custom banner message can be added.

Pre- and post-action dialogs execute as separate transactions. Learn more in the Javadocs.

The following is a basic skeleton of a record action:

package com.veeva.vault.custom.actions;

@RecordActionInfo(label="Say Hello", object="hello_world__c", icon="create__sys", usages={Usage.USER_ACTION, Usage.WORKFLOW_STEP})

public class Hello implements RecordAction {

// This action is available for configuration in Vault Admin.

public boolean isExecutable(RecordActionContext context) {

return true;

}

public void execute(RecordActionContext context) {

//action logic goes here

}

}

Record workflow actions are custom actions which execute on an object or document workflow. Document workflows are a type of object workflow which are configured on the envelope__sys object.

If you are unfamiliar with object or document workflows, you should learn more before coding a record workflow action:

You can configure a custom action for object workflows on any of the following steps:

WorkflowEvent Enum in the Javadocs.Once configured on a workflow step, the record workflow action is automatically invoked on all events for that workflow step during workflow execution.

You cannot start a workflow, create tasks, and cancel the new tasks in the same transaction. For example, you cannot start a workflow and then cancel all tasks in a TASK_AFTER_CREATE event.

A record workflow action is a Java class that implements the RecordWorkflowAction interface and has the @RecordWorkflowActionInfo annotation.

The RecordWorkflowAction interface must implement the execute() method. This is where you place the logic for your custom action. You can use any of the available Java SDK services to create logic for your action. For example:

WorkflowInstanceService: Update workflow participantsWorkflowTaskService: Cancel workflow tasksNotificationService: Send notifications to workflow participantsRecordService: Create related recordsJobService: Begin a related workflow. Note that you can only start workflows where the Participants control set to Use roles as participants.Unlike record actions or document actions, the RecordWorkflowAction interface does not include an isExecutable() method. This means all record workflow actions are available for configuration once deployed. If you want to enable or disable a deployed record workflow action, you can do so in the UI or through Vault API.

The @RecordWorkflowActionInfo annotation has the following elements:

label: Label of the action. This is the label which appears for Vault Admins when configuring this action.object: If specified, the action is only available for object workflows associated to the specified object. To make this action available for document workflows, set this to the envelope__sys object. If omitted, the action is available across all object and document workflows.stepTypes: The workflow step types that this action can be configured against.The following is a basic skeleton of a record workflow action:

package com.veeva.vault.custom.actions;

@RecordWorkflowActionInfo(label="Custom Approver", stepTypes={WorkflowStepType.START})

public class CustomApprover implements RecordWorkflowAction {

public void execute(RecordWorkflowActionContext context) {

//action logic goes here

}

}

Along with the standard document actions you can configure in the Vault UI, you can create custom document actions using the Vault Java SDK to automate more specific business processes. Unlike document actions created through the Vault UI, custom document actions can run multiple sequential actions within one action, and can execute more complex conditional logic.

You can configure the following types of custom document actions:

You can find examples of document actions in our Sample Code.

A document action is a Java class that implements the DocumentAction interface and has the @DocumentActionInfo annotation.

The DocumentAction interface requires implementing the following two methods:

isExecutable(): Optional: Return true to make the configured action visible and executable. For example, you could check the current document field values or a user’s role membership in order to determine if this method should return true or false. Defaults to true if this method is not explicitly implemented.execute(): Add your action logic in this method. Here you can make updates to the current document or use any of the Vault Java SDK services to interact with other documents or records.The @DocumentActionInfo class annotation requires the following:

label: Label of the action. This label appears for Vault Admins during action configuration. This is not the label users see when running the action.lifecycle: Specifies for which lifecycle the action is available. If omitted, the action is available across all document lifecycles.usage: Specifies for which usages the action is available for configuring. For example, USER_ACTION. If omitted, defaults to UNSPECIFIED, which means the action is available everywhere actions are supported with the exception of bulk actions. If Vault adds new usages for record actions in future releases, such as WORKFLOW_STEP, actions with UNSPECIFIED usage immediately become available for the new usage.user_input_object_type: If your action takes user input, you may want to identify the user input object type. If omitted, this action does not require a type for user input. Actions which require user input may not require a type.user_input_object: If your action takes user input, identify the user input object. If omitted, this action does not require user input.icon: The icon which appears in the Vault UI Action Bar for this action. Learn more about action icons.Optionally, a dialog can be shown in the UI before and after the action execution for a class that implements the DocumentAction interface.

onPreExecute: This method allows a confirmation dialog to be displayed to the user prior to the execution of a document action. A custom dialog title and message can be added. *onPostExecute: This method allows a post document action execution banner to be displayed to the user. A custom banner message can be added.Learn more about pre- and post-action dialogs in the Javadocs.

The following is a basic skeleton of a document action:

package com.veeva.vault.custom.actions;

@DocumentActionInfo(label="Set Expiration", usage="LIFECYCLE_ENTRY_ACTION", icon="update__sys")

public class SetDocumentExpiration implements DocumentAction {

// This action is available for configuration in Vault Admin.

public boolean isExecutable(DocumentActionContext context) {

return true;

}

public void execute(DocumentActionContext context) {

//action logic goes here

}

}

Choose which icon users see in the Action Bar for custom record and document actions by using the icon element of a RecordActionInfo or DocumentActionInfo annotation. If no icon is specified, the default value is "", meaning no icon is displayed and the action is not eligible to appear in the most frequently used section of the Action Bar. You can choose any of the following icons:

| Label | Value | Icon |

|---|---|---|

| Create | create__sys | |

| Update | update__sys | |

| Delete | delete__sys | |

| Save | save__sys | |

| Remove | remove__sys | |

| Send | send__sys | |

| Import | import__sys | |

| Export | export__sys | |

| Generate | generate__sys | |

| Sync | sync__sys |

To debug action code, developers must deploy the code to Vault and configure a usage for the action. When the configured action is invoked through Vault, execution passes to the debugger to allow developers to step through the code. The code in your debugger will override any deployed code, allowing developers to test changes to a deployed action. Note that the class you wish to develop and debug must have the same package, class name, and annotation as the deployed code.

While entry point interfaces like actions, triggers, and jobs define how and when Vault executes custom logic, services interfaces provide getter and setter methods that allow entry point implementations to interact with operations and data in Vault. For example, a RecordTrigger implementation might useJobService to start a workflow on a record, then use RecordService to update field values on the record. You can even create your own user-defined services.

Services are available to all entry point implementations.

To use a Vault service, you’ll first need to create an instance of that service using ServiceLocator.locate. The example below creates a new instance of JobService called jobService.

JobService jobService = ServiceLocator.locate(JobService.class);

Documents and object records are the most common types of data you’ll want to interact with. DocumentService and RecordService provide methods to create, update, and delete documents, document versions, and object records.

These services also allow creating, updating, and deleting attachments.

RecordService also allows setting record migration mode for a specific transaction. When migration mode is on, Vault bypasses entry criteria, entry actions, validation rules, event actions, and reference constraints when creating or updating records. While record migration mode is on, you cannot change an object’s type in the same transaction.

The trigger in the example below executes after Vault creates a vsdk_service_basics__c object record and uses RecordService to create two related records.

@RecordTriggerInfo(object = "vsdk_service_basics__c", events = {RecordEvent.AFTER_INSERT})

public class vSDKRecordService implements RecordTrigger {

public void execute(RecordTriggerContext recordTriggerContext) {

RecordEvent recordEvent = recordTriggerContext.getRecordEvent();

RecordService recordService = ServiceLocator.locate(RecordService.class);

List<Record> recordList = VaultCollections.newList();

RecordBatchSaveRequest.Builder recordSaveRequestBuilder = recordService.newRecordBatchSaveRequestBuilder();

if (recordEvent.toString().equals("AFTER_INSERT")) {

for (RecordChange inputRecord : recordTriggerContext.getRecordChanges()) {

String name = inputRecord.getNew().getValue("name__v", ValueType.STRING);

String id = inputRecord.getNew().getValue("id", ValueType.STRING);

String relatedTo = inputRecord.getNew().getValue("related_to__c", ValueType.STRING);

// Break out of the trigger code if the new record has a related "vsdk_service_basics__c" record.

// This indicates that the records are "Copy of" records from "vSDKQueryService.java"

// and do not need processing.

if (relatedTo == "" || relatedTo == null) {

// Creates two related records by creating a new record via the RecordService.

// The name of records is set as "Related to: <name> x"

// The relation to the parent to then set with the "related_to__c" object reference field.

for (int i = 1; i <= 2; i++) {

Record r = recordService.newRecord("vsdk_service_basics__c");

r.setValue("name__v", "Related to: '" + name + "' " + i);

r.setValue("related_to__c", id);

recordList.add(r);

}

}

}

recordSaveRequestBuilder.withRecords(recordList);

RecordBatchSaveRequest saveRequest = recordSaveRequestBuilder.build();

// If there are records to insert, the batchSaveRecords takes a RecordBatchSaveRequest as input.

// This request should contain every new record that you are adding or updating.

if (recordList.size() > 0) {

recordService.batchSaveRecords(saveRequest)

.onErrors(batchOperationErrors -> {

// Iterate over the caught errors.

// The RecordBatchOperation.onErrors() returns a list of BatchOperationErrors.

// The list can then be traversed to retrieve a single BatchOperationError and

// then extract an **ErrorResult** with BatchOperationError.getError().

batchOperationErrors.stream().findFirst().ifPresent(error -> {

String errMsg = error.getError().getMessage();

int errPosition = error.getInputPosition();

String name = recordList.get(errPosition).getValue("name__v", ValueType.STRING);

throw new RollbackException("OPERATION_NOT_ALLOWED", "Unable to create '" +

recordList.get(errPosition).getObjectName() + "' record: '" +

name + "' because of '" + errMsg + "'.");

});

})

.execute();

}

}

}

}

When retrieving values for formula fields, Vault returns the value of the field after running the formula expression, not the expression itself.

Vault does not update object records or documents when a user or the Vault system saves without making any changes. This means that the Last Modified Date is not changed, record triggers do not execute, and Vault does not create an event in the object record or document audit history.

The services in the lifecycle package provide methods that allow custom code to interact with object and document lifecycles.

ObjectLifecycleMetadataService: Provides getter methods to retrieve metadata for object lifecycles. For example, you can retrieve all lifecycle metadata fields for a single object record, or retrieve a collection of all object lifecycles available in a Vault.ObjectLifecycleStateUserActionMetadataService: Provides methods to retrieve metadata about an object lifecycle user action.ObjectLifecycleStateUserActionService: Provides methods to execute an object lifecycle user action.The Vault Java SDK supports initiating object lifecycle user actions, which are available to users on an object record in a specific lifecycle state. User actions can begin a workflow, move the record into a new lifecycle state, etc. Learn more about object lifecycle user actions in Vault Help.

The ObjectLifecycleStateUserActionMetadataService provides methods to retrieve configuration metadata about user actions, while ObjectLifecycleStateUserActionService provides methods to execute those actions.

The following example illustrates how to retrieve configuration metadata about available lifecycle user actions.

ObjectLifecycleStateUserActionMetadataService service = ServiceLocator.locate(ObjectLifecycleStateUserActionMetadataService.class);

LogService logService = ServiceLocator.locate(LogService.class);

// Retrieve metadata about user actions on a particular lifecycle state

// Build the metadata request

ObjectLifecycleUserActionMetadataRequest request = service.newLifecycleUserActionMetadataRequestBuilder()

.withLifecycleName(lifecycleName)

.withLifecycleState(lifecycleState)

.withActionType("LIFECYCLE_RUN_WORKFLOW_ACTION") // Optional: filter the type of the user actions to be returned

.build();

ObjectLifecycleUserActionCollectionResponse response = service.getLifecycleUserActions(request);

List<ObjectLifecycleUserActionMetadata> userActionMetadata = response.getUserActions();

for (ObjectLifecycleUserActionMetadata data : userActionMetadata) {

logService.info("User action metadata: label {}, component name {}, user action name {}," +

"type {}, workflow name {}, record action class name {}, fully qualified name {}",

data.getLabel(), data.getName(), data.getUserActionName(), data.getType(),

data.getWorkflowName(), data.getRecordActionClassName(), data.getFullyQualifiedActionName());

}

// Retrieve metadata about user actions on an object record

// Build the metadata request

ObjectRecordLifecycleUserActionMetadataRequest request = service.newRecordLifecycleUserActionMetadataRequestBuilder()

.withObjectName("object__c")

.withRecordId("V6U000000001001")

.withLifecycleState("inactive_state__c") // Optional: set the lifecycle state for the record, otherwise use the state the record is currently on

.build();

ObjectRecordLifecycleUserActionCollectionResponse response = service.getRecordLifecycleUserActions(request);

List<ObjectRecordLifecycleUserActionDetail> details = response.getUserActions();

for (ObjectRecordLifecycleUserActionDetail detail : details) {

logService.info("User action is executable {}, is viewable {}", detail.isExecutable(), detail.isViewable());

ObjectLifecycleUserActionMetadata metadata = detail.getMetadata();

logService.info("User action metadata: label {}, component name {}, ...",

metadata.getLabel(), metadata.getName());

}

The following example illustrates how to retrieve and use input parameter metadata for user actions. This service returns metadata about RecordActions annotated with a user_input_object, configured as a user action.

ObjectLifecycleStateUserActionService service = ServiceLocator.locate(ObjectLifecycleStateUserActionService.class);

// Build the request

ObjectLifecycleUserActionInputParameterMetadataRequest request = service.newInputParameterMetadataRequestBuilder()

.withUserActionName("Objectlifecyclestateuseraction.object__c.active_state__c.action__c")

.build();

ObjectLifecycleUserActionInputParameterMetadata metadata = service.getInputParameterMetadata(request);

// Get the metadata for the input parameter

String userInputObjectName = metadata.getUserInputObjectName();

String userInputObjectType = metadata.getUserInputObjectTypeName():

// Use the metadata to create a record of that object

Record input = recordService.newRecord(userInputObjectName);

input.setValue("name__v", "new name");

input.setValue("field__c", "new value");

RecordBatchSaveRequest saveRequest = recordService.newRecordBatchSaveRequestBuilder()

.withRecords(VaultCollections.asList(input))

.build();

recordService.batchSaveRecords(saveRequest)

.rollbackOnErrors()

.execute();

String userInputObjectRecordId = input.getValue(RECORD_ID, ValueType.STRING);

// Use the userInputObjectRecordId to build a request to execute an action with an input

UserActionExecutionRequest request = userActionService.newExecutionRequestBuilder()

.withObjectName("object__c")

.withObjectRecordId("V6U000000001001")

.withUserInputRecordId(userInputObjectRecordId)

.withUserActionName("Objectaction.object__c.action__c") // Use the fully qualified name of the action

.build();

The following example illustrates how to execute an action. An action can be a user action, such as a state change, or an object action that is either available on all lifecycle states or configured on a particular state.

The UserActionExecutionRequest.Builder is used to construct the request, which is submitted in a UserActionExecutionRequest. Optionally, you can specify success and error handlers on the UserActionExecutionOperation before execution.

ObjectLifecycleStateUserActionService userActionService = ServiceLocator.locate(ObjectLifecycleStateUserActionService.class);

LogService logService = ServiceLocator.locate(LogService.class);

// Build user action execution request for the record

UserActionExecutionRequest request = userActionService.newExecutionRequestBuilder()

.withObjectName("object__c")

.withObjectRecordId("V6U000000001001")

.withUserInputRecordId(userInputObjectRecordId) // Optional: if the user action is a RecordAction annotated with a user_input_object.

.withUserActionName("Objectaction.object__c.action__c") // Use the fully qualified name of the action.

.build();

// Execute the user action

userActionService.executeUserAction(request)

.onSuccess(userActionExecutionResponse -> {

// Logic to be executed if the user action completes successfully.

logService.info("Successfully executed user action");

})

.onError(error -> {

// Logic to be executed if the user action encounters an error when executing.

if (error.getErrorType().equals(ActionErrorType.PERMISSION_DENIED)) {

logService.error("Failed to execute user action: " + error.getMessage());

}

})

.execute();

DocumentLifecycleMetadataService: Provides getter methods to retrieve metadata for document lifecycles. For example, you can retrieve metadata for all document roles for a lifecycle, or retrieve a collection of all document lifecycles available in a Vault.DocumentLifecycleStateUserActionMetadataService: Provides getter methods to retrieve metadata about a document lifecycle user action, document version lifecycle user action, and user action input.DocumentLifecycleStateUserActionService: Provides methods to execute a document lifecycle user action.The Vault Java SDK supports initiating document lifecycle user actions, which are available to users on a document in a specific lifecycle state. User actions can begin a workflow, move the document into a new lifecycle state, and more. Learn more about document lifecycle user actions in Vault Help.

DocumentLifecycleStateUserActionMetadataService provides methods to retrieve configuration metadata about user actions, while DocumentLifecycleStateUserActionService provides methods to execute those actions.

The following example illustrates how to retrieve configuration metadata about available lifecycle user actions.

DocumentLifecycleStateUserActionMetadataService service = ServiceLocator.locate(DocumentLifecycleStateUserActionMetadataService.class);

LogService logService = ServiceLocator.locate(LogService.class);

// Retrieve metadata about user actions on a particular lifecycle state

// Build the metadata request

DocumentLifecycleUserActionMetadataRequest request = service.newUserActionRequestBuilder()

.withLifecycleName(lifecycleName)

.withStateName(lifecycleState)

.build();

DocumentLifecycleUserActionMetadataResponse response = service.getUserActions(request);